By Asha Lang

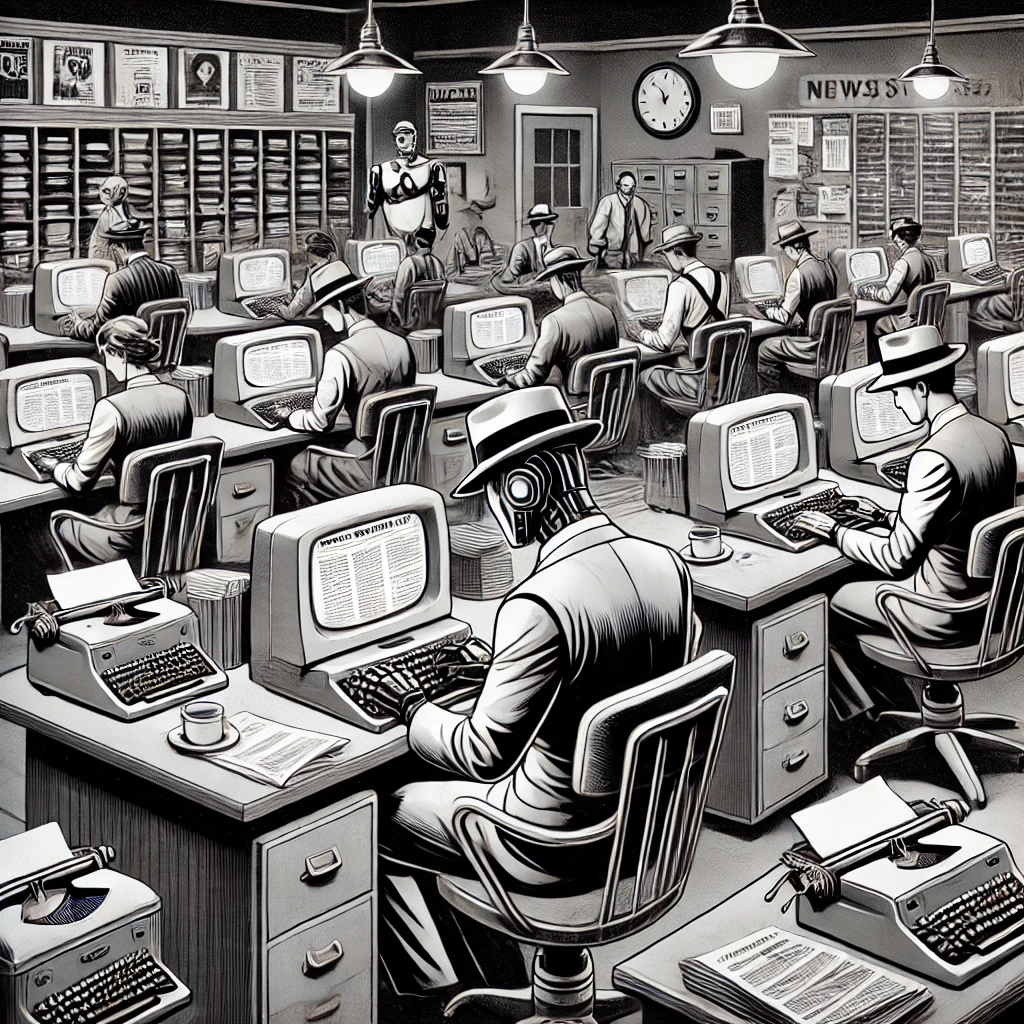

Disruption has long been the calling card of the technology industry, especially when there's a profit to be made. Now, with all the subtlety of a junior reporter pitching an unvetted scoop to a deadline-weary editor, generative AI has entered the newsroom: dazzling in its capabilities, intriguing in its potential, and trailing an unmistakable air of unease.

Journalists clutch their notebooks a little tighter. Readers narrow their eyes. And in newsrooms worldwide, editors quietly wonder: will the next chapter of journalism be scripted by an algorithm?

Journalism, however, is no stranger to existential crises. Over the past three decades, the industry has faced relentless challenges: the internet’s slow erosion of print revenue, the rise of digital platforms that siphoned off audiences and advertising dollars, and the proliferation of misinformation in the pursuit of clicks. Newsrooms have shrunk, paywalls have risen, and legacy mastheads have fought to remain relevant in a radically transformed media landscape.

Generative AI promises unprecedented efficiency, scalability, and speed. But these advances raise a pressing question: at what cost to trust, truth, and journalistic integrity?

Now, an extensive international academic study explores how generative AI is reshaping, and potentially redefining, the very fabric of journalism. Led by RMIT University, Queensland University of Technology, Washington State University, and the ARC Centre for Automated Decision Making and Society, the research spans seven countries, dozens of newsrooms, and the evolving expectations of readers.

I. The Many Masks of AI in the Newsroom

Behind the Scenes: AI as the Silent Assistant

Generative AI has slipped quietly into the newsroom's back office, performing tasks that are dull, repetitive, or simply tedious. From transcribing interviews to summarizing transcripts and automating layouts, AI thrives where human attention wanes. Think of it as the perfect intern: tireless, efficient, and alarmingly good at its job—albeit one who never asks for coffee breaks.

News outlets like The Daily Telegraph and the Halls Creek Herald are already experimenting with AI in audience-facing ways, proving that size is no barrier to ambition. Behind the scenes, the tools optimize SEO metadata, help storyboard narratives, and even fact-check information. Yet, as with all quiet assistants, the question remains: can you trust what you don't fully see?

Creating Content: From Headlines to Visual Storytelling

The temptation of AI's creative prowess is irresistible. Automated story writing, photorealistic illustrations, and AI-generated news anchors are no longer the stuff of speculative fiction. Imagine a newsroom where the breaking news banner is composed by an algorithm, and the face delivering it is a pixel-perfect AI presenter.

Yet the allure of convenience comes at a cost. Audiences in the study were notably less comfortable with AI generating photorealistic images or serving as virtual news presenters. Perhaps it's the uncanny valley effect, or perhaps (perish the thought) readers suspect that a machine, no matter how sophisticated, cannot replicate the soul of storytelling.

New Delivery Methods: Personalized, But at What Price?

AI’s ability to tailor content delivery—personalized homepages, AI-generated summaries, and chatbot-led discussions—raises both eyebrows and ethical dilemmas. Audiences appreciate personalization but worry that algorithms will merely echo their biases. In an era where democracy relies on shared truths, AI-driven echo chambers could be the final act in journalism's credibility crisis.

II. Legal and Ethical Quandaries: Who Owns the Story?

Copyright Conundrums and Intellectual Theft

One editor lamented that tools like Midjourney and DALL-E are “stealing images and stealing ideas”. The charge isn’t baseless. AI models often train on copyrighted works, leaving journalists and news outlets wondering if they’re complicit in intellectual theft.

Even Adobe's supposedly “ethical” Firefly model came under fire when it emerged that its training data included AI-generated images from Midjourney. The line between originality and plagiarism is blurring faster than an AI-generated background.

Misinformation and Trust Erosion

AI-generated summaries can spread misinformation. In a 2024 gaffe, Apple's AI-generated news notification summaries falsely claimed that a U.S. murder suspect had shot himself. Journalists worry about their ability to detect AI-generated content, while audiences fear being duped by synthetic media.

Transparency emerges as a non-negotiable expectation. Readers want AI use disclosed upfront—no fine print, no sleight of hand. After all, trust, once eroded, is a story no algorithm can rewrite.

Bias, Representation, and the Algorithmic Gaze

AI, much like society, has biases. The study highlighted how AI privileges urban environments and certain demographics. Worse, attempts to “force” diversity algorithmically often fail, sometimes spectacularly. The danger? Journalism that inadvertently reinforces stereotypes, undermining the very purpose of reporting truth.

III. Audience Expectations: Caution, Curiosity, and Conditional Trust

AI with a Human Touch

Audiences are pragmatic. They support AI in behind-the-scenes roles—transcription, fact-checking, and brainstorming—but draw the line at AI-generated photorealism or virtual presenters. Human oversight is essential. If AI must write, let a human edit. If AI must illustrate, let it be clearly labeled.

Policies, Transparency, and Minimalism

Nearly every participant in the study demanded robust newsroom policies governing AI use. Transparency isn’t just preferred; it’s required. Labels indicating AI involvement should be prominent and consistent. And when in doubt? Use AI as sparingly as possible.

IV. Navigating the Future: Questions Worth Asking

The future of generative AI in journalism is not a matter of simple answers but of careful navigation—a winding road dotted with questions that demand thoughtful reflection. As AI continues its steady march into the newsroom, the industry finds itself at a critical juncture.

How much AI is too much? Some envision AI as the perfect background player, quietly streamlining workflows and enhancing efficiency without stealing the spotlight. Others wonder whether AI might take a more central role, shaping narratives and delivering stories with a precision no human could match. But would this shift come at the cost of the human voice that gives journalism its soul?

Transparency looms large in this conversation. Newsrooms have long prized credibility, yet the competitive pressures of the digital era can tempt even the most principled outlets to blur the lines. Will transparency around AI usage become an industry standard? Or will the rush for clicks and scoops chip away at this ideal, leaving audiences in the dark about who—or what—is telling their stories?

Then comes the thorny issue of ownership. In a world where algorithms remix human creativity, who truly owns AI-generated journalism? The writer who fed the prompt? The engineer who built the model? Or the machine itself, tirelessly churning out content without pause or credit? These questions strike at the heart of authorship, challenging long-held assumptions about what it means to create.

Bias, too, casts a long shadow. Algorithms, after all, reflect the data they are trained on—and, by extension, the biases of those who build them. How can newsrooms ensure that AI-generated content represents diverse voices fairly? Can editorial teams consistently audit AI outputs for hidden prejudices without falling into the same traps themselves?

And perhaps the most unsettling question of all: What happens when audiences can no longer tell the difference between stories crafted by human hands and those assembled by machines? If journalism’s power lies in its human touch—in the empathy, context, and insight only lived experience can provide—what becomes of that power when human and machine outputs become indistinguishable?

The road ahead is uncertain. But one thing is clear: how the industry answers these questions will define not just the future of journalism, but the very nature of truth and trust in an age increasingly shaped by code.

The Algorithm Will See You Now—But Should It?

It's not as if algorithms have ever hurt anyone. Isn't that right, hundreds-of-years-of-democratic-evolution?

Generative AI promises efficiency, creativity, and scale. But journalism—true journalism—is not just about reporting facts. It’s about context, nuance, and the ineffable human condition. The machines can write, sure. But can they feel? Can they hold power to account?

As the industry lurches toward AI adoption, newsrooms must resist the urge to embrace technology for technology's sake. Let the algorithm assist. But let humanity tell the story.